Since Apple introduced notebooks based on the M1 Max chip, tests and comparisons against Nvidia, AMD and Intel processors and graphics cards have shown that its results are far from perfect. Twitter user David Huang has now compared the video codec performance of the Apple M1 Max processor with that of an Intel Arc A380 graphics card.

In terms of CPU performance, Apple is at the top of its game, but that comes at the expense of graphics performance. Nvidia RTX 30 series graphics cards take almost a third less time to render than the Apple M1 Max. The latest comparison pits the Intel Arc A380 graphics card against the integrated graphics in the Apple M1 Max.

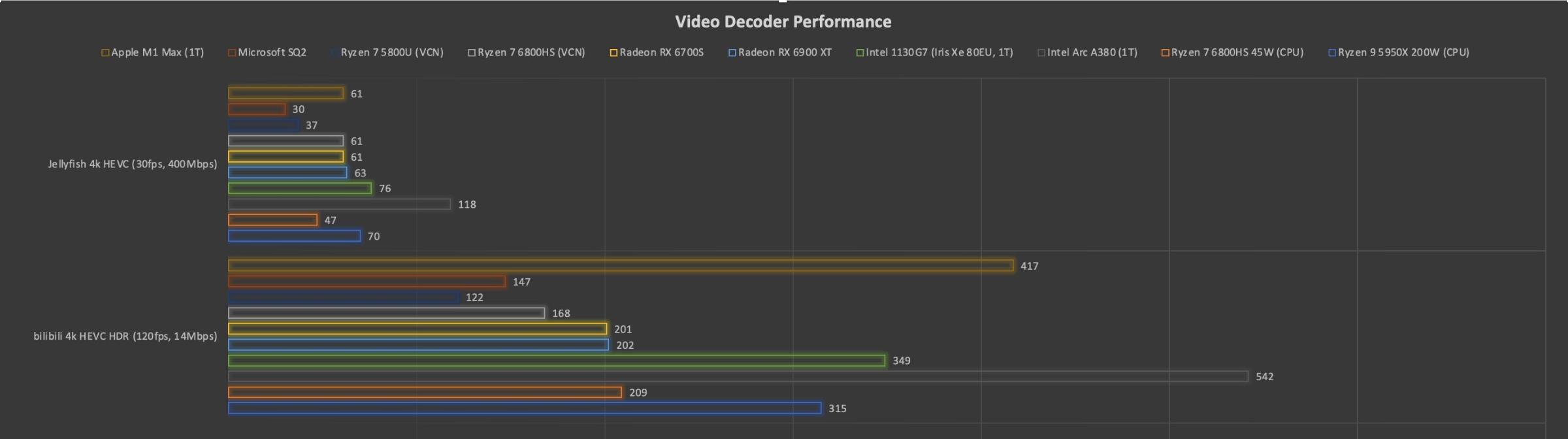

The M1 Max's video decoding unit is slower than the Arc A380's. In extreme cases, such as ultra-high bitrates, it decodes at roughly half the speed, while at low bitrates the gap is relatively small.

The M1 Max has two media blocks, just like the Arc A380. Decoding multiple video streams in parallel can therefore double the total frame rate, which comes in handy for video playback.

The tests used two video streams: Jellyfish 4K HEVC (30 fps, 400 Mbps) and bilibili 4K HEVC HDR (120 fps, 14 Mbps). In the former, the Apple M1 Max decoded at 61 fps, while the Arc A380 reached 118 fps, nearly double that. In the latter, the Apple chip delivered 417 fps versus Intel's 542 fps.
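To make the size of the gap concrete, the short sketch below works out the ratio between the two chips for each test. It assumes the figures quoted above (61 vs. 118 and 417 vs. 542) are decoding frame rates in frames per second; the `results` dictionary and the `speedup` helper are illustrative names, not part of the original test.

```python
# Figures quoted in the article, assumed to be decoded frames per second.
results = {
    "Jellyfish 4K HEVC (400 Mbps)": {"M1 Max": 61, "Arc A380": 118},
    "bilibili 4K HEVC HDR (14 Mbps)": {"M1 Max": 417, "Arc A380": 542},
}


def speedup(fps_a380: float, fps_m1: float) -> float:
    """Return how many times faster the Arc A380 decodes than the M1 Max."""
    return fps_a380 / fps_m1


# Ratio of Arc A380 throughput to M1 Max throughput for each test clip.
ratios = {
    name: speedup(fps["Arc A380"], fps["M1 Max"])
    for name, fps in results.items()
}

for name, ratio in ratios.items():
    print(f"{name}: Arc A380 decodes at {ratio:.2f}x the M1 Max rate")
```

On these numbers the Arc A380 is about 1.93 times as fast at the very high bitrate and about 1.30 times as fast at the low bitrate, which matches the pattern described earlier: a large gap at extreme bitrates and a smaller one otherwise.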

That said, Apple doesn’t support formats like HEVC 422/444 and ProRes. On the other hand, Apple chips are already being sold in laptops and desktop computers, while Intel graphics cards are mostly just talked about. Intel's cards also suffer from driver problems, so software support for Apple chips is much better, WCCFTech writes.